It seems that distributed version control has become a somewhat hot topic lately. The latter two posts make the case that being able to work offline is extremely useful, both for road warriors and for users with less than ideal Internet access. Yes, this does seem like a pretty good motivation for distributed version control. Indeed, it was my laptop and my dialup connection (aside from sheer curiosity) that first got me using darcs two years ago. But now I have one of these fancy ADSL connections and less need to travel or to hack offline while doing so. Yet I continue to use and love darcs. I'm sure this is something that bzr, git, Mercurial, etc. users can attest to: yes, offline versioning is indeed a great feature, but there is something more.

Warning: this is a rather long post. My apologies to Planet Haskellers and other busy readers.

One mechanism, many benefits

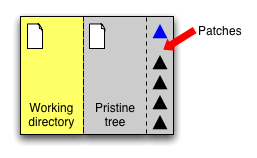

The thing that attracts me to a system like darcs is its conceptual elegance. From one single mechanism, you get the following features for free:

- Painless initialisation

- Offline versioning

- Branching and merging

- Easier collaboration with outsiders

These are all the same thing in the darcs world; there is no fanciness at work whatsoever. I suppose simplicity on its own is not much of a selling point, so in the rest of this post I'm going to explore these four benefits of a distributed model and discuss what their implications might be for you, the developer.

Painless initialisation

Getting started is easier because you don't have any central repositories to set up. That might sound awfully petty. After all, setting up a central repository is nothing more than a mkdir and cvs checkout. But it's much more of an inconvenience than you might think.

Setting up a central repository means you have to think in advance about putting your repository in the right place. You can't, for instance, set something up locally, change your mind, decide that you want a server, and switch over instantly. You COULD tarball your old repository, move it to the server, and then either fiddle with your local configuration or check out your repository again. But why should you? Why jump through all the hoops? The steps are simple, but they add friction. How many times have you NOT set up a repository for your code because it would have been a pain (a 30-second pain, but a pain nonetheless)? How many times have you put off a repository move because it was a pain? Painless initialisation means two things: (1) instant gratification and (2) the ability to change your mind. I would argue that such painlessness is crucial because it lowers the barrier of inconvenience to the point where you actually do the things you are supposed to do.
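To make "the ability to change your mind" concrete, here is a minimal sketch of the property that makes it cheap: the repository is just an ordinary directory that carries its own history in a metadata subdirectory, so initialising it needs no server and moving it later is a plain copy. The metadata directory name `_vcs` and the helpers `init_repo`, `is_repo` and `publish` are hypothetical, chosen for illustration; darcs keeps its own metadata in a `_darcs` directory, but its real layout is not modelled here.

```python
import os
import shutil
import tempfile

META_DIR = "_vcs"  # hypothetical metadata directory name (darcs uses _darcs)


def init_repo(path: str) -> None:
    """Turn a (possibly new) directory into a repository; no server involved."""
    os.makedirs(os.path.join(path, META_DIR, "patches"), exist_ok=True)


def is_repo(path: str) -> bool:
    """In this sketch, a directory is a repository iff it has the metadata dir."""
    return os.path.isdir(os.path.join(path, META_DIR))


def publish(src: str, dest: str) -> None:
    """Changing your mind about where the repository lives is just a copy.

    dest could be a path on a mounted server share; the history travels
    with the files, so there is nothing to reconfigure.
    """
    shutil.copytree(src, dest)


def demo() -> tuple[bool, bool]:
    """Start locally, then decide you want a 'server' copy after all."""
    with tempfile.TemporaryDirectory() as tmp:
        local = os.path.join(tmp, "project")
        init_repo(local)
        server = os.path.join(tmp, "server", "project")  # stand-in for a server
        publish(local, server)
        return is_repo(local), is_repo(server)
```

Nothing has to be decided up front: the local copy stays a complete repository after it has been published, and either copy can serve as the "central" one.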

Branching and merging

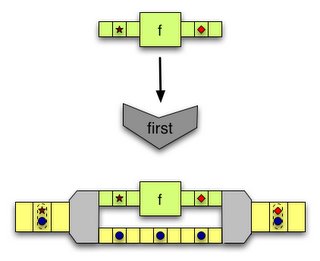

A well-thought-out distributed version control system does not need a separate notion of branching and merging. Why? Because a branch can simply be the same thing as a repository, or a checkout. There is no need to learn two sets of concepts or operations, or two views of your version control universe. Just think of them as one and the same. Now, you might be worried about, say, the redundancy of all this (gee! wouldn't that mean that branches take up a lot of space?)... but eh... details.

For starters, disk space is cheap, or at least much cheaper than it used to be. There might be cases where you are trying to version very large binary files, but in many programming jobs we are only shuffling text around, so why worry? Besides, branches are supposed to be disposed of (merged) one day or another, right? They are not going to be all that long-lived; otherwise it's just a fork. Moreover, worrying about disk space is the version control system's job, not yours. You could have a VCS that tries very hard to save space. For example, it could use hard links whenever possible, in much the same manner as "snapshot" backup systems. Disk space is not what you, the programmer, should be worrying about. It's similar to the case being made for having a second or third monitor: programmer time is more valuable than disk space.
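The hard-link trick can be sketched in a few lines. This is a hypothetical helper, not how any particular VCS implements it: it mirrors a directory tree, hard-linking each file with `os.link` and falling back to a real copy when linking fails (for instance across filesystems). It is only safe for files that are never modified in place, such as stored patch files; a file edited in place through one link would change in every clone.

```python
import os
import shutil
import tempfile


def clone_tree(src: str, dst: str) -> tuple[int, int]:
    """Mirror src into dst, hard-linking files where possible.

    Returns (linked, copied) counts. Only appropriate for immutable files:
    hard links share storage, so in-place edits would show up in both trees.
    """
    linked = copied = 0
    for root, _dirs, files in os.walk(src):
        target_root = os.path.normpath(os.path.join(dst, os.path.relpath(root, src)))
        os.makedirs(target_root, exist_ok=True)
        for name in files:
            s = os.path.join(root, name)
            d = os.path.join(target_root, name)
            try:
                os.link(s, d)  # no extra disk space for the file's data
                linked += 1
            except OSError:
                shutil.copy2(s, d)  # e.g. dst is on a different filesystem
                copied += 1
    return linked, copied


def demo() -> bool:
    """Clone a tiny 'repository' and check the clone has the same contents."""
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "repo")
        os.makedirs(os.path.join(src, "patches"))
        with open(os.path.join(src, "patches", "0001"), "w") as f:
            f.write("example patch contents\n")
        linked, copied = clone_tree(src, os.path.join(tmp, "branch"))
        with open(os.path.join(tmp, "branch", "patches", "0001")) as f:
            same = f.read() == "example patch contents\n"
        return same and linked + copied == 1
```

Because the fallback is a plain copy, the clone is always correct; the hard links are purely a space optimisation that the programmer never has to think about.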

Offline versioning

Previous posts have discussed this at length. It's still useful even if your Internet connection is superb, because it lets you hold on to changes that aren't quite ready for the central repository but are worth versioning until you're more confident about your code.
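The single mechanism behind branching and offline versioning can be sketched with a toy model. In this hypothetical sketch, a repository is just an ordered list of named patches: recording works without any server, a branch is simply a clone, and merging is pulling the patches you lack. It assumes patches never conflict and leaves out darcs's actual patch theory (commutation and conflict handling), so it illustrates the idea rather than darcs internals.

```python
from dataclasses import dataclass, field


@dataclass
class Repo:
    """Hypothetical toy repository: nothing but an ordered list of patch names."""

    patches: list[str] = field(default_factory=list)

    def record(self, name: str) -> None:
        """Record a new local patch; works offline, no server needed."""
        self.patches.append(name)

    def clone(self) -> "Repo":
        """A branch is just another repository holding a copy of the patches."""
        return Repo(list(self.patches))

    def pull(self, other: "Repo") -> list[str]:
        """Merging is pulling: add every patch from `other` that we lack.

        Assumes no conflicts; returns the names of the patches pulled in.
        """
        new = [p for p in other.patches if p not in self.patches]
        self.patches.extend(new)
        return new


trunk = Repo(["initial import"])
feature = trunk.clone()               # "create a branch"
feature.record("add spam filter")     # versioned locally, offline
trunk.record("fix typo in README")    # meanwhile, upstream moves on
trunk.pull(feature)                   # "merge the branch back"
```

Branch, checkout and repository are the same object here; the only operations are `record`, `clone` and `pull`.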

Collaboration with outsiders

Open source and free software projects thrive on contributions from outsiders. For example, in the past year, 80% of the 360 patches to the darcs repository have come from somebody other than David Roundy, the original author of darcs. I'm cheating a little bit because many of these patches are also from known insiders. Then again, all of the known insiders were outside contributors at some point. The switch from outsider to insider has been for the most part informal: you send enough patches in and people eventually get to know you. And that's it; very little in the way of formal processes.

Outsider collaboration is made easier for two reasons: offline versioning and decentralisation.

By offline versioning, I mean that people can make their modifications locally, retrieve changes from the central repository, and still have their modifications intact, versioned and ready to go. Consider a popular project like the mail client mutt. Some mutt users have patches that are useful to a few people but not appropriate for the central repository, so they make their changes available as Unix patches. If you're lucky, the patch applies to the version of mutt that you've downloaded. If you're not so lucky, you've got some cleaning up to do and a new set of patches. I'm not talking about merging or conflict resolution per se; assume that resolving conflicts requires human intervention either way. Say you've fixed things so that the patch compiles against the new version. What exactly do you do now? Make a patch to the patched version? "Update" the original patch so that it works with the new version of the repository? And what about the original author: what does s/he do with your patch? None of this is hard in itself, but it is a major source of friction. It gums up the works of free software development, or of any large project, open source or closed.

If you are a project maintainer, a tool that handles offline versioning makes it easier for you to accept changes from outside contributors (zero insertion force: no need to apply patches by hand and re-commit them).

If you are a contributor, an offline versioning tool makes it easier for you to submit modifications to the project. You don't have to create patches manually: you don't have to keep a clean copy of the project around next to your working copy, you don't have to worry about where you run your diffs (so that the patch --strip option comes out right), and you don't have to worry about what happens when the central repository changes and your patch no longer applies. Again, I'm not referring to conflict resolution. If there are conflicts, somebody will have to resolve them, but resolving and versioning those conflicts should involve as little bureaucracy as possible. For extra credit, some version control systems even implement a "send" feature with which you submit your local modifications via email. The maintainers of the repository can then choose to apply the patches at their leisure. These aren't regular Unix diff patches, mind you; they are intelligent patches with all the version-tracking goodness built in.
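The "send" idea can be sketched with the same kind of toy patch list: the contributor works out which patches the maintainer's repository lacks and bundles them as plain text suitable for an email, and the maintainer applies the bundle directly, skipping anything already present, with no hand-made diffs and no re-committing. The bundle format and the names `make_bundle` and `apply_bundle` are hypothetical; real bundles carry much more (the patch contents themselves, plus enough context to apply them safely), which this sketch reduces to patch names.

```python
BUNDLE_HEADER = "# toy patch bundle"  # hypothetical format, one patch name per line


def make_bundle(local: list[str], upstream: list[str]) -> str:
    """Contributor side: bundle the local patches that upstream does not have."""
    missing = [p for p in local if p not in upstream]
    return "\n".join([BUNDLE_HEADER, *missing]) + "\n"


def apply_bundle(repo: list[str], bundle: str) -> list[str]:
    """Maintainer side: apply a bundle in place and return the patches added.

    Patches already present are skipped, so applying a bundle twice is harmless.
    """
    lines = bundle.splitlines()
    if not lines or lines[0] != BUNDLE_HEADER:
        raise ValueError("not a toy patch bundle")
    added = [p for p in lines[1:] if p and p not in repo]
    repo.extend(added)
    return added


upstream = ["initial import", "fix build"]
mine = ["initial import", "add sidebar"]    # contributor has not pulled "fix build"
bundle = make_bundle(mine, upstream)        # what the contributor emails
added = apply_bundle(upstream, bundle)      # what the maintainer runs
```

The contributor never has to rebase a diff by hand: the bundle names exactly what is missing, and the maintainer's repository records it as-is.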

Offline versioning adds convenience to the mix, which is a technical benefit. If you flip it around and look at it in terms of distributed control, you can see some subtle social consequences as well. Since there is no need for a central repository, there is much less pressure on the central maintainer to either accept patches or reject them outright: the maintainer knows that outside contributors can get along fine with their modifications safely versioned in their local repositories. If worst comes to worst, the outside contributors can place their repositories online and have people work from there instead. It sounds like a fork, which sucks... but sometimes, fork happens. Sometimes you get forks over differences in opinion, disagreements between developers, or general unpleasantness. But sometimes you get more innocent forks: for example, the main developer suddenly gets a new job and is now working 60 hours a week. S/he is still committed to the project but, to be honest, hasn't looked at patches for the last month. No big deal; the rest of us will just work from this provisional repository until the main developer gets back on his/her feet. There's a social and a technical aspect to forking. Distributed version control greatly simplifies the technical aspect, and that in turn mellows out the social one. Distributed version control means that life goes on.

Simplicity and convenience

I'm really only making two points here. Simplicity matters: it flattens the learning curve for newbies and frees experienced users from carrying mental baggage around. Convenience matters: it reduces the friction that leads you to put off the things you should be doing, and it removes some of the technical barriers to wide-ranging collaboration. I could always be mistaken, of course. Perhaps there is some bigger picture, some forest to my trees, and upon discovering said forest I will find myself deeply chagrined at having gotten all worked up over something as silly as patches. But until then, I will continue to use darcs and love it for how much easier it makes my life.

distributed chiming in